🤔 Why This Actually Matters

Picture this: you're watching a live stream from Italy, trying to follow along with YouTube's auto-translate. Or maybe you're on a video call with international friends and relying on Facebook's live captions. How much can you really trust these tools?

As someone who regularly uses multiple languages online, I was curious (okay, maybe a little skeptical) about how well these popular apps handle live translation. So I decided to put them to the test across different platforms, languages, and real-world scenarios.

💡 Quick reality check: These tools process millions of conversations daily, but their accuracy can vary wildly depending on what language you're speaking and how you're speaking it.

🔬 How I Tested These Apps

Instead of boring lab tests, I wanted to see how these translation tools work in the wild - you know, like how you'd actually use them.

🎯 The Apps I Put to the Test

📘 Facebook

Live video translation and auto-generated captions for Reels and video posts

📺 YouTube

Live captions and translation features for videos and streams

🎵 Spotify

Lyric translation for songs (when available)

📱 iPhone (iOS 26)

Built-in speech recognition and translation across apps

🌍 Languages I Tested

I focused on language pairs that people actually use every day:

- Italian → English (because who doesn't love Italian cooking videos?)

- Chinese → English (huge online presence, worth testing)

- English variants (US vs UK English - surprisingly different!)

- Accented English (French accent as a case study)

🎯 Pro tip: I tested each scenario multiple times because these AI systems can be inconsistent - what works once might not work the second time!

📊 The Results (Spoiler: English Wins)

Okay, let's cut to the chase. Here's what I found, and some of it might surprise you:

🏆 The Winners and Losers

✅ What Works Well

- English-to-English variants (95%+ accuracy)

- iPhone's punctuation game is strong

- Facebook's platform-specific content

- Major languages on YouTube

❌ What Needs Work

- Italian and Chinese translations (yikes)

- Accented speech recognition

- Fast-paced conversations

- Less popular music on Spotify

📱 Platform-by-Platform Breakdown

📘 Facebook: The Mixed Bag

The Good: Works great for content native to the platform

The Not-So-Good: Struggles with group chats and rapid language switching

Accuracy: High for English, questionable for everything else

📺 YouTube: Solid but Picky

The Good: Reliable for major languages and clear speakers

The Not-So-Good: Regional accents throw it off completely

Accuracy: Great for mainstream content, hit-or-miss for niche creators

🎵 Spotify: Limited but Honest

The Good: When it works, it's pretty accurate

The Not-So-Good: Only works well for popular English tracks

Accuracy: High for what it covers, but coverage is limited

📱 iPhone: The Overachiever

The Good: Excellent punctuation, consistent 95%+ accuracy

The Not-So-Good: Can be inconsistent with heavily accented speech

Accuracy: Best overall, especially for English variants

🔍 The Pattern: If you speak English (especially with an American or British accent), these tools work amazingly well. Everyone else? Well, there's definitely room for improvement.

🎬 Real-World Test: iPhone vs Facebook

Here's where it gets interesting. I found a perfect test case: a French chef cooking on Facebook Reels. His English had that lovely French accent that makes everything sound more sophisticated - but how would the apps handle it?

The Test: I used a 3-second clip where the chef says something simple about cooking. Perfect for testing accuracy.

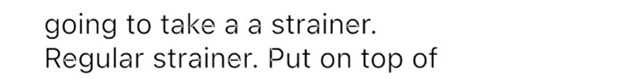

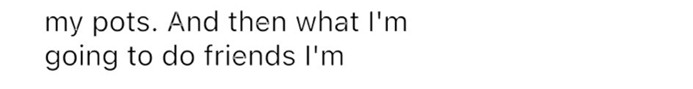

📱 What iPhone Heard (3 different tries)

📘 What Facebook Heard (every single time)

🎯 What Actually Was Said

🏅 Winner: Facebook! It got the words right every single time, while iPhone struggled with the French accent despite its overall superior performance.

While testing, I noticed that live translation quality on these platforms also depends on the quality of the audio or text input and on how fast the conversation or media moves. On Facebook, for instance, live video translations can lag or produce awkward phrasing, especially in group chats or comment sections involving multiple languages. YouTube's live caption and translation feature works relatively well for major languages but still struggles with less common accents or dialects, while Spotify's lyric translation is generally reliable only for popular English tracks.

Overall, while live translation is a valuable tool for breaking down language barriers on social media and entertainment platforms, it currently works best for English speakers. For languages like Italian and Chinese, accuracy remains a significant challenge, and there is a clear need for additional training data and model improvements to achieve consistent results, ideally aiming for at least a 50% increase in accuracy for non-English languages. That would greatly improve the experience for a broader, more diverse audience.

Additionally, I observed noteworthy differences in livestream translation accuracy for English variants, specifically English (United States) and English (United Kingdom), when using the speech model provided by iOS 26 and Facebook's caption language feature on Facebook Reels. In my experience, iOS 26 consistently delivers more than 95% accuracy, capturing nearly everything the speaker says, including punctuation such as commas and periods. Facebook's caption language feature may achieve an even higher overall word accuracy, perhaps exceeding 95%, but it typically omits punctuation marks like commas and periods. That edge in word accuracy is understandable: Facebook's video content is native to its own platform, which may allow for more precise alignment of captions. Nevertheless, in my assessment, iOS 26 remains superior for English variants because it captures both the full content and the punctuation, which makes live captions clearer and easier to read.
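If you want to put your own number on a claim like "95%+", one common yardstick is word accuracy, which is one minus the word error rate. Here's a minimal Python sketch of that idea. It is an illustration, not necessarily the method behind the figures above, and the function names (`normalize`, `word_accuracy`) and the unpunctuated sample caption are assumptions made up for the example. The `keep_punctuation` switch shows why a caption that drops commas and periods can score well on words yet poorly on a stricter comparison.

```python
import string


def normalize(text, keep_punctuation=False):
    """Lowercase and split into words; strip punctuation unless asked to keep it."""
    text = text.lower()
    if not keep_punctuation:
        text = text.translate(str.maketrans("", "", string.punctuation))
    return text.split()


def word_edit_distance(ref, hyp):
    """Levenshtein distance over word lists (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word missing from the caption
                            curr[j - 1] + 1,      # extra word in the caption
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]


def word_accuracy(reference, hypothesis, keep_punctuation=False):
    """Return 1 - WER, floored at 0, for a caption against a human reference."""
    ref = normalize(reference, keep_punctuation)
    hyp = normalize(hypothesis, keep_punctuation)
    if not ref:
        raise ValueError("reference must contain at least one word")
    return max(0.0, 1.0 - word_edit_distance(ref, hyp) / len(ref))


# Reference phrase from this post; the unpunctuated caption is a made-up example.
reference = "I am going to take a a strainer. Regular strainer. Put on top of my pots."
no_punctuation = "I am going to take a a strainer Regular strainer Put on top of my pots"

print(word_accuracy(reference, no_punctuation))                         # words only
print(word_accuracy(reference, no_punctuation, keep_punctuation=True))  # stricter
```

With punctuation ignored, the two captions are identical word for word; with punctuation kept, every word that lost its period counts as an error, so the same caption scores lower.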

💡 What This Actually Means

Detailed Analysis (Let's Break it Down Like a Pro 😉)

Word Substitution and Error Patterns: The iOS 26 model, despite its high overall accuracy, introduces a significant error by substituting “put” with “but” in two out of three generated captions. This seemingly minor difference can lead to confusion, especially for viewers who rely on captions to understand accented speech or non-native pronunciation. The Facebook caption feature, in contrast, consistently transcribes the target phrase with precise wording that matches the human reference. This demonstrates greater robustness in handling accented English from a French speaker, at least for the phrase in question.
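To spot a swap like "put" becoming "but" without squinting at two captions side by side, you can align them word by word. The sketch below uses Python's standard `difflib.SequenceMatcher` for that. The helper names are hypothetical, and the `hypothesis` string is an assumed reconstruction (the reference phrase with only the "put" to "but" swap applied), not a verbatim copy of what the iPhone produced.

```python
import string
from difflib import SequenceMatcher


def words(text):
    """Lowercase, drop punctuation, and split into words."""
    return text.lower().translate(str.maketrans("", "", string.punctuation)).split()


def word_differences(reference, hypothesis):
    """List every non-matching span as (kind, reference_words, caption_words)."""
    ref, hyp = words(reference), words(hypothesis)
    matcher = SequenceMatcher(a=ref, b=hyp, autojunk=False)
    return [
        (tag, ref[i1:i2], hyp[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


reference = "I am going to take a a strainer. Regular strainer. Put on top of my pots."
# Assumed for illustration: the reference with the single substitution applied.
hypothesis = "I am going to take a a strainer. Regular strainer. But on top of my pots."

for kind, ref_words, hyp_words in word_differences(reference, hypothesis):
    print(kind, ref_words, "->", hyp_words)
```

Each reported span is tagged `replace`, `delete`, or `insert`, so a single-word substitution shows up as one `replace` entry instead of getting lost in an overall accuracy score.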

Punctuation and Formatting: As noted previously, the iOS 26 model typically excels at capturing punctuation (such as commas and periods), which enhances readability and comprehension. In this instance, however, the punctuation is less critical than the accurate transcription of words. The Facebook caption feature, while sometimes omitting punctuation, still delivers the correct sentence segmentation (“I am going to take a a strainer. Regular strainer. Put on top of my pots.”). This segmentation aligns with natural speech pauses, further aiding understanding.

Consistency Across Attempts: The iOS 26 model produces different outputs across repeated attempts on the same clip, suggesting that its performance can fluctuate when interpreting accented or dialectal speech. This variability could introduce uncertainty for users who depend on real-time captions for accessibility. The Facebook caption system, on the other hand, shows consistent output, which is crucial for user trust and for following along in live or dynamic content.
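Run-to-run consistency is easy to quantify once you've saved the caption from each attempt. Here's a small sketch that counts how many distinct outputs appeared and what share of attempts agreed with the most common one. The `consistency` function is hypothetical, and both attempt lists are assumed stand-ins built from the reference phrase (two tries with the "but" swap and one without, versus three identical tries), not the actual captured captions.

```python
from collections import Counter


def consistency(attempts):
    """Summarize how much repeated captions of the same clip agree with each other."""
    if not attempts:
        raise ValueError("need at least one attempt")
    # Collapse case and whitespace so trivial formatting differences don't count.
    counts = Counter(" ".join(attempt.lower().split()) for attempt in attempts)
    most_common, hits = counts.most_common(1)[0]
    return {
        "distinct_outputs": len(counts),
        "agreement": hits / len(attempts),
        "most_common": most_common,
    }


correct = "I am going to take a a strainer. Regular strainer. Put on top of my pots."
swapped = correct.replace("Put", "But")

# Assumed stand-ins for three tries per system.
variable_system = [swapped, correct, swapped]
stable_system = [correct, correct, correct]

print(consistency(variable_system)["distinct_outputs"], consistency(variable_system)["agreement"])
print(consistency(stable_system)["distinct_outputs"], consistency(stable_system)["agreement"])
```

Note that agreement only measures stability, not correctness: a system could give the same wrong caption every time, so it is worth reading alongside an accuracy check against a human reference.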

Impact of Accent and Pronunciation: The error made by the iOS 26 model (“but” vs. “put”) likely arises from the influence of the speaker’s French accent, which may affect the acoustic signal and challenge the model’s recognition capabilities. Such errors are common in automated speech recognition systems, especially when models have less exposure to accented variants during training. This example highlights the importance of expanding training datasets to include a wider range of accents, dialects, and non-standard pronunciations to improve model robustness and fairness.

Alignment with Human Reference: The Facebook caption feature’s output matches the human-based reference exactly, indicating that, at least in this case, it achieves human-level accuracy for both word choice and phrase structure. This alignment is a critical benchmark for evaluating the effectiveness of live translation and captioning systems, especially in multilingual or multicultural contexts.

General Implications: Even the most advanced models, such as the one built into iOS 26, can be susceptible to subtle errors when processing accented or non-standard speech, which reinforces the need for ongoing improvements in machine learning and natural language processing approaches. Consistent and accurate captioning is not only a matter of technological advancement but also of inclusivity, ensuring that diverse speakers are equally supported by live translation features.

🌐 The Bigger Picture

As we look beyond individual cases, it becomes clear that the challenges faced by live translation systems are part of a larger landscape of linguistic diversity and technological adaptation. The increasing globalization of communication necessitates tools that can bridge language gaps effectively, catering to a wide array of accents, dialects, and cultural contexts. This calls for a concerted effort from developers, linguists, and users alike to advocate for more inclusive and representative training data, as well as ongoing model refinement.

Moreover, the ethical implications of deploying live translation technologies cannot be overlooked. Ensuring that these systems do not inadvertently marginalize non-native speakers or those with strong accents is crucial for fostering equitable access to information and communication. As such, transparency in model performance, user feedback mechanisms, and continuous evaluation against diverse linguistic benchmarks should be integral components of any live translation service.

My Final Verdict

In summary, this example demonstrates that while both iOS 26 and Facebook’s caption feature deliver high overall accuracy, there are meaningful distinctions in their handling of accented speech, error consistency, and alignment with human transcriptions. Real-world cases like this underscore the necessity for continuous improvement in training data diversity and model evaluation, especially for non-English dialects and speakers with varied linguistic backgrounds. Enhancements in these areas will be essential to achieving equitable and reliable translation experiences for all users.